Browsing by author "Ruiz Costa-jussà, Marta"

Now showing items 1-3 of 3

Explaining how transformers use context to build predictions

Ferrando Monsonís, Javier; Gallego Olsina, Gerard Ion; Tsiamas, Ioannis; Ruiz Costa-jussà, Marta (Association for Computational Linguistics, 2023)

Conference paper

Open access

Language Generation Models produce words based on the previous context. Although existing methods offer input attributions as explanations for a model’s prediction, it is still unclear how prior words affect the model’s ...

Explaining how transformers use context to build predictions

Ferrando Monsonís, Javier; Gallego Olsina, Gerard Ion; Tsiamas, Ioannis; Ruiz Costa-jussà, Marta (2023-05-21)

Research report

Open access

Language Generation Models produce words based on the previous context. Although existing methods offer input attributions as explanations for a model's prediction, it is still unclear how prior words affect the model's ...

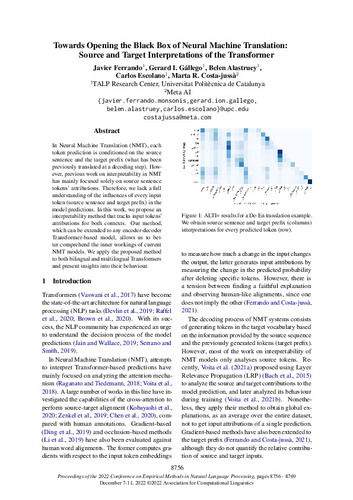

Towards opening the black box of neural machine translation: source and target interpretations of the transformer

Ferrando Monsonís, Javier; Gallego Olsina, Gerard Ion; Alastruey Lasheras, Belen; Escolano Peinado, Carlos; Ruiz Costa-jussà, Marta (Association for Computational Linguistics, 2022)

Paper in conference proceedings

Open access

In Neural Machine Translation (NMT), each token prediction is conditioned on the source sentence and the target prefix (what has been previously translated at a decoding step). However, previous work on interpretability ...